Unleashing the Power of Generative AI with Amazon Bedrock

Amazon Bedrock is transforming how businesses adopt Generative AI by providing access to leading foundation models through a single API. With Bedrock, developers can build, scale, and deploy AI-powered applications without managing infrastructure or training models from scratch.

Whether you’re creating conversational chatbots, streamlining workflows, or enhancing decision-making, Bedrock offers the foundation to innovate with confidence.

Why Amazon Bedrock Stands Out

- Serverless Architecture: Eliminates the complexity of resource provisioning and infrastructure management.

- Flexibility: Supports pre-trained or fine-tuned models tailored to specific business needs.

- Security and Compliance: Protects data with AWS security controls and encryption, and supports compliance standards such as GDPR and HIPAA.

- Monitoring and Logging: Records API activity in AWS CloudTrail for auditing and transparency.

Bedrock’s fully managed service ensures businesses can leverage AI responsibly and at scale.

Why Do We Need Custom Models?

Custom models allow businesses to tailor AI to their unique needs by:

- Adapting Industry-Specific Language: Incorporating domain-specific terminology.

- Improving Niche Performance: Optimizing AI capabilities for specialized use cases.

- Embedding Proprietary Data: Integrating business-specific workflows and datasets for a competitive edge.

Integrating Custom Models with Bedrock

Why integrate custom models with Amazon Bedrock?

It offers the best of both worlds: customization and simplicity.

- Seamless Integration: Unified access to custom and foundation models via Bedrock APIs.

- Fully Managed Service: Removes the burden of lifecycle or infrastructure management.

- Enhanced AI Features: Leverages Bedrock’s tools like Agents, Guardrails, and Prompt Flows.

- Unified API Access: Streamlines operations through the Amazon Bedrock Converse API.

Prerequisites for Custom Model Import

Before importing your custom model into Bedrock, ensure the following:

- Supported Architectures: Llama (Meta), Mistral, Mixtral, and Flan T5.

- Serialization Format: Models must be in the Safetensors format.

- Required Files: .safetensors, config.json, tokenizer_config.json, tokenizer.json, and tokenizer.model.

- Precision Types: FP32, FP16, and BF16.

- Adapter Merging: Adapters from fine-tuning (e.g., LoRA adapters trained with PEFT) must be merged into the base model weights before import.
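The prerequisites above can be checked locally before anything is uploaded. The sketch below is a hypothetical helper (`check_model_dir` is not part of any AWS tool); it assumes a Hugging Face style model folder whose config.json records the weight precision in a `torch_dtype` field, and it treats the required-file list above as fixed.

```python
import json
from pathlib import Path

# File names taken from the prerequisites list; adjust if your model's
# tokenizer ships a different set of files.
REQUIRED_FILES = ["config.json", "tokenizer_config.json", "tokenizer.json", "tokenizer.model"]

# Hugging Face `torch_dtype` values mapped to the supported precision types.
SUPPORTED_DTYPES = {"float32": "FP32", "float16": "FP16", "bfloat16": "BF16"}


def check_model_dir(model_dir: str) -> list[str]:
    """Return a list of problems found in a local model folder (empty if none)."""
    root = Path(model_dir)
    problems = []

    # At least one weights file must be serialized as Safetensors.
    if not list(root.glob("*.safetensors")):
        problems.append("no .safetensors weights found")

    for name in REQUIRED_FILES:
        if not (root / name).is_file():
            problems.append(f"missing {name}")

    # Read the precision recorded in config.json, if the file exists.
    config_path = root / "config.json"
    if config_path.is_file():
        dtype = json.loads(config_path.read_text()).get("torch_dtype")
        if dtype not in SUPPORTED_DTYPES:
            problems.append(f"torch_dtype {dtype!r} is not FP32, FP16, or BF16")
    return problems
```

Running `check_model_dir("Llama-3.2-1B")` on a downloaded folder returns an empty list when every check passes.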

Step-by-Step Guide: Importing Custom Models into Bedrock

1. Download the Model

Download the model (e.g., meta-llama/Llama-3.2-1B) from Hugging Face, or clone its repository with Git LFS:

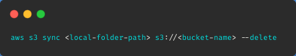

2. Upload to Amazon S3

Upload the model files to an S3 bucket in the same AWS Region where the model will be imported.
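The upload can be scripted with boto3. This sketch assumes AWS credentials are already configured; the bucket name, key prefix, and function names are hypothetical. It keeps the folder layout under one prefix so the import job can point at a single S3 location, and it skips hidden folders such as local download caches.

```python
from pathlib import Path


def plan_uploads(local_dir: str, prefix: str) -> list[tuple[str, str]]:
    """Map each file under local_dir to an S3 key under prefix."""
    root = Path(local_dir)
    base = prefix.strip("/")
    plan = []
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        # Skip directories and anything inside hidden folders (e.g. .cache).
        if not path.is_file() or any(part.startswith(".") for part in rel.parts):
            continue
        key = f"{base}/{rel.as_posix()}" if base else rel.as_posix()
        plan.append((str(path), key))
    return plan


def upload_model(local_dir: str, bucket: str, prefix: str, region: str) -> str:
    """Upload the model folder and return the S3 URI to use as the import source."""
    import boto3

    s3 = boto3.client("s3", region_name=region)
    for filename, key in plan_uploads(local_dir, prefix):
        s3.upload_file(filename, bucket, key)
    base = prefix.strip("/")
    return f"s3://{bucket}/{base + '/' if base else ''}"
```

For example, `upload_model("Llama-3.2-1B", "my-model-bucket", "models/llama-3.2-1b", "us-east-1")` would return `s3://my-model-bucket/models/llama-3.2-1b/`.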

3. Import the Model in Bedrock

- In the AWS Console, go to Amazon Bedrock > Imported models and choose Import model.

- Provide the following details:

  - Model Name: Name your model (e.g., Llama-3.2-1B).

  - VPC Settings: Optional; configure only if needed.

  - Import Job Name: Assign a unique job name.

  - Source: Specify the S3 location of the model files.

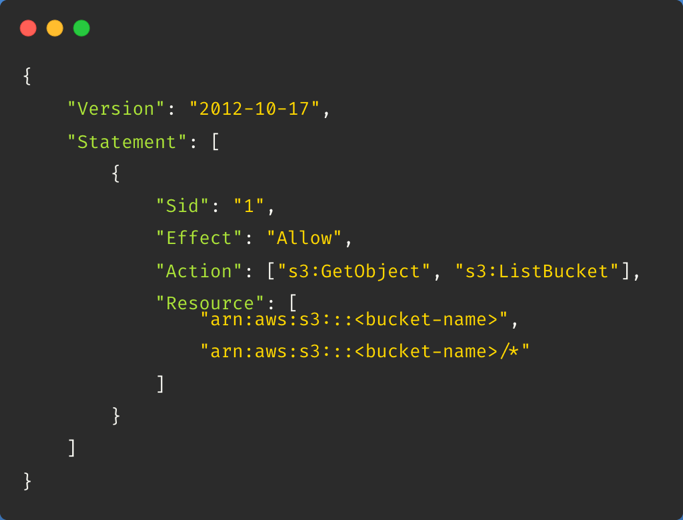
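The same import job can be started without the console through the Bedrock control-plane API. A minimal sketch assuming boto3 with the `bedrock` client's `create_model_import_job` operation; the job name, role ARN, and S3 URI are placeholders, and the helper names are hypothetical. VPC settings are added only when both subnets and security groups are supplied, mirroring the optional console field.

```python
def build_import_job_request(job_name, model_name, role_arn, s3_uri,
                             subnet_ids=None, security_group_ids=None):
    """Assemble keyword arguments for create_model_import_job."""
    request = {
        "jobName": job_name,
        "importedModelName": model_name,
        "roleArn": role_arn,
        "modelDataSource": {"s3DataSource": {"s3Uri": s3_uri}},
    }
    # Optional VPC configuration, matching the console's VPC settings.
    if subnet_ids and security_group_ids:
        request["vpcConfig"] = {
            "subnetIds": subnet_ids,
            "securityGroupIds": security_group_ids,
        }
    return request


def start_import_job(request, region):
    """Submit the job and return its ARN."""
    import boto3

    bedrock = boto3.client("bedrock", region_name=region)
    response = bedrock.create_model_import_job(**request)
    return response["jobArn"]
```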

4. Authorize Access

Create a new IAM service role, or use an existing one, that allows Bedrock to read the model files from your S3 bucket.
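If you create the role yourself, it needs a trust policy that lets the Bedrock service assume it and a permissions policy that grants read access to the bucket. The policies below are a sketch modelled on the pattern in the Bedrock documentation, not a verified copy of it; check the current docs before use. The account ID, Region, bucket, and role/policy names are hypothetical placeholders.

```python
import json


def build_role_policies(account_id: str, region: str, bucket: str):
    """Return (trust_policy, permissions_policy) as dicts."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "bedrock.amazonaws.com"},
            "Action": "sts:AssumeRole",
            # Restrict the role to model import jobs in this account and Region.
            "Condition": {
                "StringEquals": {"aws:SourceAccount": account_id},
                "ArnEquals": {
                    "aws:SourceArn": f"arn:aws:bedrock:{region}:{account_id}:model-import-job/*"
                },
            },
        }],
    }
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        }],
    }
    return trust_policy, permissions_policy


def create_import_role(role_name: str, account_id: str, region: str, bucket: str) -> str:
    """Create the role with an inline S3 read policy and return its ARN."""
    import boto3

    trust, perms = build_role_policies(account_id, region, bucket)
    iam = boto3.client("iam")
    role = iam.create_role(RoleName=role_name, AssumeRolePolicyDocument=json.dumps(trust))
    iam.put_role_policy(RoleName=role_name, PolicyName=f"{role_name}-s3-read",
                        PolicyDocument=json.dumps(perms))
    return role["Role"]["Arn"]
```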

5. Submit the Import Job

After you submit it, the import job takes approximately 10 minutes to complete; larger models may take longer. Once it finishes, the model appears under Imported models and can be invoked through the Bedrock APIs using its model ARN.

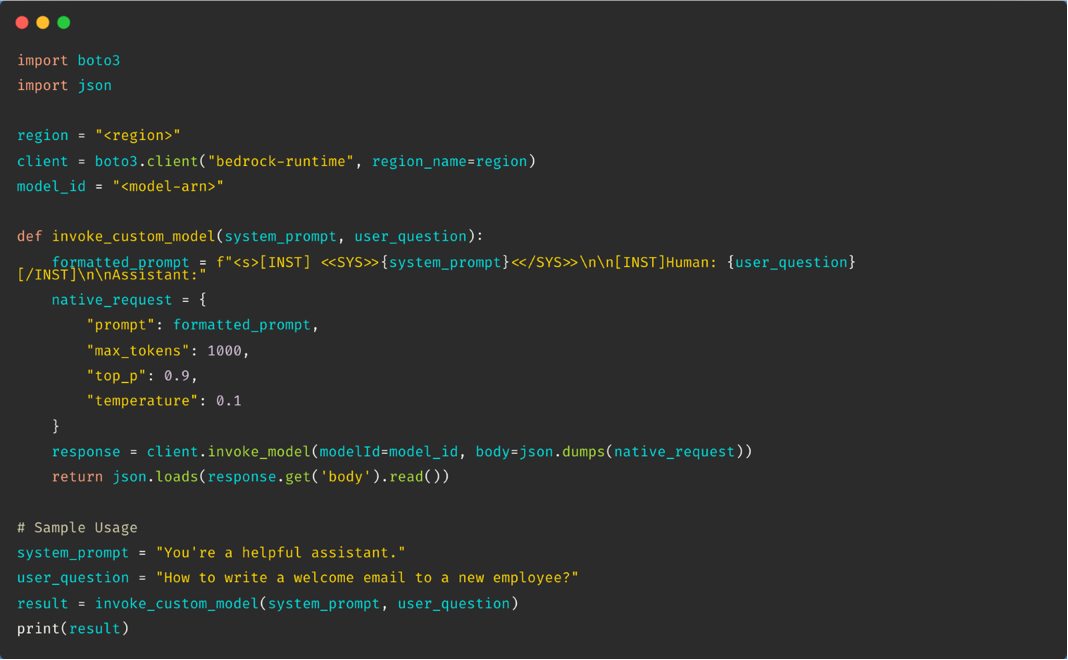
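Rather than watching the console, a script can poll the job until it finishes. A minimal sketch assuming the `bedrock` client's `get_model_import_job` operation, which reports a `status` of `InProgress`, `Completed`, or `Failed`; the function names and the polling interval are illustrative. The status getter and `sleep` are passed in so the loop can be exercised without AWS.

```python
import time


def wait_for_import(get_job, job_arn, poll_seconds=60, max_polls=120, sleep=time.sleep):
    """Poll until the job leaves InProgress and return its final description."""
    for _ in range(max_polls):
        job = get_job(jobIdentifier=job_arn)
        if job["status"] != "InProgress":
            return job
        sleep(poll_seconds)
    raise TimeoutError(f"import job {job_arn} still in progress after {max_polls} polls")


def imported_model_arn(job_arn, region):
    """Wait for the job and return the imported model's ARN, or raise on failure."""
    import boto3

    bedrock = boto3.client("bedrock", region_name=region)
    job = wait_for_import(bedrock.get_model_import_job, job_arn)
    if job["status"] == "Completed":
        return job["importedModelArn"]
    raise RuntimeError(f"import failed: {job.get('failureMessage', 'no message')}")
```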

Using Custom Models with Bedrock: Python Example

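A Python script invokes the imported model through the Bedrock Runtime API, passing a system prompt and a user question and returning the model's reply as JSON.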
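A minimal sketch of such a script follows, under several assumptions. It uses boto3's `bedrock-runtime` client with `invoke_model`, passing the imported model's ARN as `modelId`. It formats the prompt with the Llama 3 chat template (best suited to the Instruct variants). The request fields (`prompt`, `max_gen_len`, `temperature`) and the `generation` response key follow AWS's Llama examples and should be verified against your model's raw response. The helper names are hypothetical.

```python
import json


def build_llama3_prompt(system_prompt: str, user_question: str) -> str:
    """Format a single-turn prompt with the Llama 3 chat template."""
    return (
        "<|begin_of_text|>"
        f"<|start_header_id|>system<|end_header_id|>\n\n{system_prompt}<|eot_id|>"
        f"<|start_header_id|>user<|end_header_id|>\n\n{user_question}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


def build_request_body(prompt: str, max_gen_len: int = 512, temperature: float = 0.5) -> str:
    # Assumed request schema for an imported Llama model.
    return json.dumps({"prompt": prompt, "max_gen_len": max_gen_len, "temperature": temperature})


def invoke_custom_model(model_arn: str, system_prompt: str, user_question: str, region: str) -> dict:
    """Call the imported model and wrap its text in {"response": ...}."""
    import boto3

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.invoke_model(
        modelId=model_arn,
        body=build_request_body(build_llama3_prompt(system_prompt, user_question)),
        contentType="application/json",
        accept="application/json",
    )
    result = json.loads(response["body"].read())
    # Assumption: generated text is returned under "generation".
    return {"response": result.get("generation", "").strip()}
```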

Sample Input:

```python
system_prompt = "You're a helpful assistant."
user_question = "How to write a welcome email to a new employee?"
```

Sample Output:

```json
{
  "response": "Subject: Welcome to the Team!\n\nDear [Employee's Name],\n\nWe are thrilled to have you join our team! Your skills and talents will be a great addition to our organization. Please let us know if you need any assistance as you settle in.\n\nLooking forward to working with you!\n\nBest Regards,\n[Your Name]\n[Your Position]"
}
```

Key Considerations

- Supported Architectures: Only Llama, Mistral, Mixtral, and Flan T5 are compatible.

- Architecture Verification: Check the `model_type` and `architectures` fields in your model's config.json.

- Conversion Tools: Use the Llama or Mistral conversion scripts to convert checkpoints that are not already in a supported format.
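The architecture check can be automated by reading config.json. This sketch assumes the Hugging Face `model_type` values `llama`, `mistral`, `mixtral`, and `t5` correspond to the supported families; note that `t5` also covers non-Flan T5 checkpoints, so treat a match as a first filter rather than a guarantee. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Optional

# Assumed mapping from config.json "model_type" to the supported families.
SUPPORTED_MODEL_TYPES = {
    "llama": "Llama",
    "mistral": "Mistral",
    "mixtral": "Mixtral",
    "t5": "Flan T5",  # also matches plain T5 checkpoints
}


def detect_architecture(config: dict) -> Optional[str]:
    """Return the supported family name, or None if model_type is not listed."""
    return SUPPORTED_MODEL_TYPES.get(str(config.get("model_type", "")).lower())


def check_config(config_path: str) -> Optional[str]:
    """Load config.json from disk and detect its architecture family."""
    return detect_architecture(json.loads(Path(config_path).read_text()))
```

For Llama-3.2-1B, whose config.json lists `LlamaForCausalLM` with `model_type` `llama`, `check_config` would return `"Llama"`.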
