How to Make DevOps Pipelines Smarter

Today's applications place heavy demand on DevOps pipelines. Because the resources these pipelines run on are finite, the pipelines need to run efficiently. Amid shrinking release cycles, businesses are demanding higher quality in less time. As testing scope grows to cover more application variations, more is expected of pipelines: they must support a broad set of capabilities, including compliance and security checks. With better management, your DevOps pipelines can even be self-healing.

Rationale for Investing in DevOps

According to Acumen Research and Consulting, the Global DevOps Market size accounted for $7,398 million in 2021 and is forecast to reach $37,227 million by 2030. Some of the drivers behind implementing DevOps include the increased need for enterprises to minimize the software development cycle, accelerate delivery, streamline efforts between IT and operations, and reduce IT expenditures.

According to the 2021 State of DevOps Report by Puppet, “83% of IT decision makers report their organizations are implementing DevOps practices to unlock higher business value through better quality software, faster delivery times, more secure systems and the codification of principles.”

Adopting DevOps enables enterprises to:

- Reduce management and production expenses

- Realize a quicker ROI

- Reduce costs with an efficient delivery system

- Save time with automation

- Reduce errors through collaboration and transparency

- Accelerate delivery times and deliver better customer service

- Increase productivity

The Need for Smarter DevOps Pipelines

In the age of digital transformation, you are building many kinds of applications: microservice backends, mobile apps, progressive web apps, IoT apps, and more. These applications are developed by multiple agile teams working in short release cycles, and all of them demand higher quality in less time. Your organization has a finite number of resources, and these resources are shared across the agile teams.

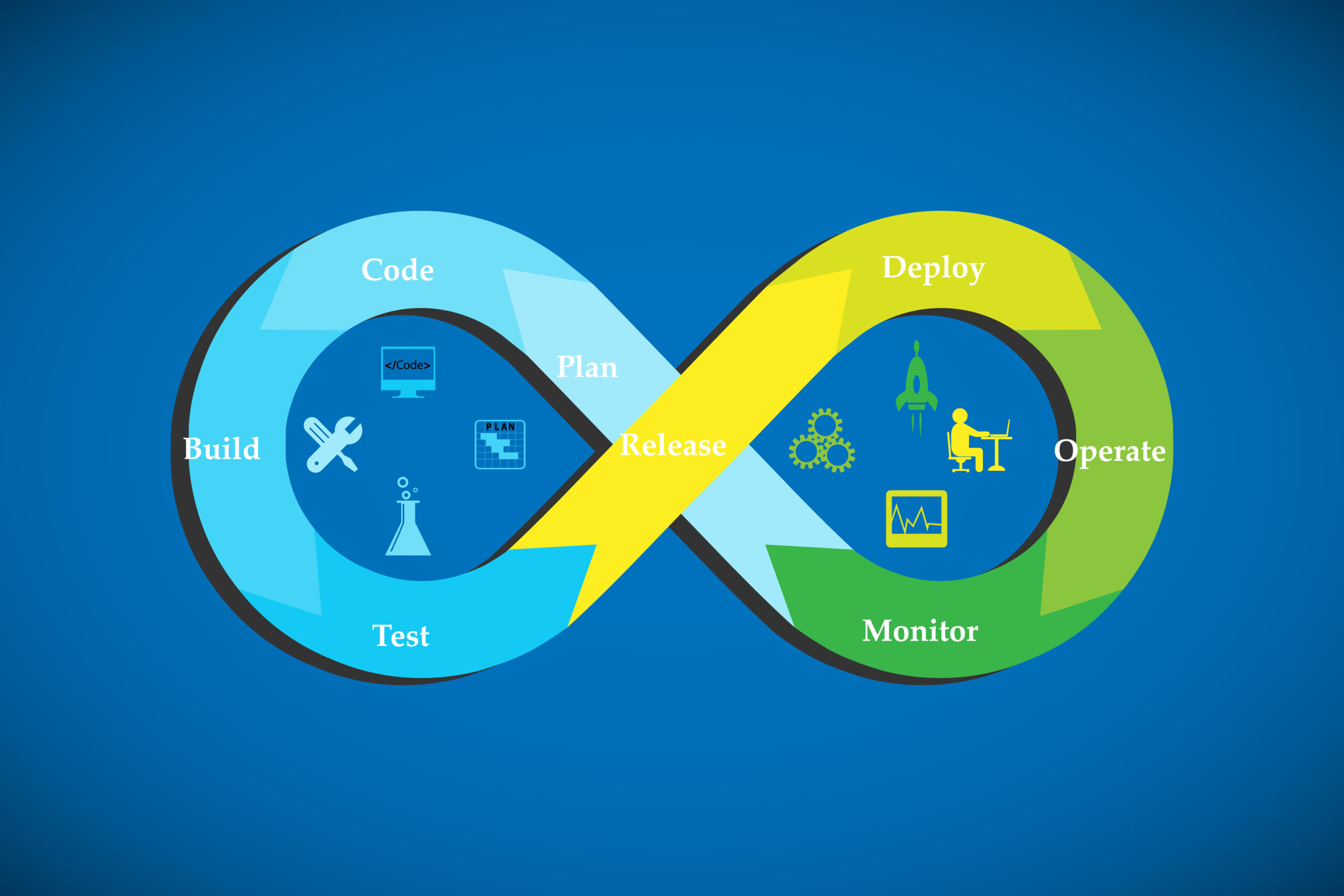

Modern pipelines do not just build and deploy applications; they also run continuous functional testing, security testing, performance testing, compliance checks, and analytics. As the number of applications and their testing coverage grows, it is important to have smarter and more efficient pipelines.

To better understand this, take the example of a standard DevOps pipeline that performs some quality checks, builds the application, deploys it to the test environment, and runs the smoke and regression tests. Say that running the smoke and regression tests takes X minutes. Every time you change a piece of code, this pipeline triggers and runs the same smoke and regression tests, whether or not they are relevant to the code you changed.

Generally, each automated test case in your test suite is tagged with an application module. Triggering only the smoke and regression tests relevant to the changed module makes the pipeline more efficient. This can be achieved by harnessing the data generated by your application, pipeline, test automation framework, and Jira. By organizing the data points from all these tools, you can make your DevOps pipeline smarter and use your resources (build machines and test infrastructure) more efficiently, saving time and money.
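As a rough illustration of tag-based test selection, the sketch below picks only the tests whose module tags overlap the changed modules. The test names, module names, and the `TEST_TAGS` mapping are hypothetical; in practice the tags would come from your test automation framework and the changed modules from your source control data.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical mapping: test case name -> application modules it is tagged with.
TEST_TAGS: Dict[str, Set[str]] = {
    "test_login_smoke": {"auth"},
    "test_checkout_regression": {"cart", "payments"},
    "test_profile_regression": {"auth", "profile"},
    "test_search_smoke": {"search"},
}


def select_tests(changed_modules: Iterable[str],
                 test_tags: Dict[str, Set[str]]) -> List[str]:
    """Return the tests tagged with at least one changed module, sorted by name."""
    changed = set(changed_modules)
    return sorted(name for name, tags in test_tags.items() if tags & changed)


# A change that touches only the (hypothetical) "auth" module.
print(select_tests(["auth"], TEST_TAGS))
```

With this mapping, a change to `auth` selects two of the four tests, so the other two never occupy the test infrastructure for that run.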

Approach for Harnessing Data for Efficiency

Continuing the testing example, your pipeline stages, commit history, test management tool, and Jira hold vast amounts of data. It is important to organize and categorize this data so that it can later be used for risk-based analysis, which makes the pipeline smarter and more efficient. With so much data available, it is important to use the right data to train systems and streamline operations. The data needs to be extracted from the different systems, processed, and transformed before it yields any value.

There is a tactical piece to this equation: getting the data into a system that runs smarter and more efficiently. The real key is working with your data scientists to model your data so that it can train your AI and ML models to produce the right recommendations. Collecting and categorizing data is a prerequisite for the system to work smarter and more efficiently. The outcome you get is a direct result of the data and how you train your system.

Steps to Training ML Models

Before training your model, it’s imperative to start creating a unified way of representing that data. Your data will be in many forms: structured, semi-structured, and completely unstructured. The first step is to standardize your data. Massaging and balancing your data is particularly important so that it can be used more effectively. Cleaning up a dataset and removing unnecessary information allows for more consistent data.
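One possible way to picture a unified representation is a single record type that every source is converted into. The sketch below is illustrative only: the `Event` fields and the raw field names (`path`, `date`, `message`, `Component`, `Severity`, `created_epoch`) are assumptions, not the schema of any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    """Hypothetical unified record shared by all data sources."""
    source: str
    module: str
    timestamp: datetime
    detail: str


def from_commit(raw: dict) -> Event:
    # Assumption: the top-level directory of the changed path names the module.
    module = raw["path"].split("/")[0].lower()
    return Event("scm", module, datetime.fromisoformat(raw["date"]),
                 raw["message"].strip())


def from_defect(raw: dict) -> Event:
    # Assumption: the defect tool exports a component, a severity, and an epoch time.
    created = datetime.fromtimestamp(raw["created_epoch"], tz=timezone.utc)
    return Event("defects", raw["Component"].strip().lower(), created,
                 raw["Severity"].strip().lower())


commit = from_commit({"path": "Payments/refund.py",
                      "date": "2023-01-05T10:00:00+00:00",
                      "message": " Fix refund rounding\n"})
defect = from_defect({"Component": " Payments ", "Severity": "Major",
                      "created_epoch": 0})
print(commit.module == defect.module)
```

Normalizing case and whitespace at this stage means later steps can join commits and defects on `module` without special handling per source.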

In this example, our goal is to decide which tests to run based on the risk associated with the modified module. To calculate the risk, we need to know which module is modified, the defect trends for the modified module, and the severity of these defects. This information is not available from a single source, and it is not well structured.

For example, you can get the versioning information from your pipeline logs. Information about which module was modified, and when, is available from your source code management logs. Your defect and test management tool has information about the defect trend, along with severity information.

By transforming the data from these logs and putting it together, you learn which version is affected within a given date range. The affected module can be found in the source code management tool's log. By doing a simple text analysis of recurring words in the log file, you can classify and label your data and gain insight into the affected components.
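A minimal sketch of that kind of text analysis follows, assuming each module can be recognized by a small set of keywords. The `MODULE_KEYWORDS` table and the sample commit messages are hypothetical; a real classifier would be built from your own logs.

```python
import re
from collections import Counter
from typing import Dict, Set

# Hypothetical keywords that indicate which module a log entry refers to.
MODULE_KEYWORDS: Dict[str, Set[str]] = {
    "auth": {"login", "token", "password"},
    "payments": {"invoice", "refund", "checkout"},
}


def label_log_entry(message: str) -> str:
    """Label a log message with the module whose keywords occur most often."""
    words = Counter(re.findall(r"[a-z]+", message.lower()))
    scores = {module: sum(words[k] for k in keywords)
              for module, keywords in MODULE_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # No keyword matched at all: leave the entry unlabeled.
    return best if scores[best] > 0 else "unknown"


print(label_log_entry("Fix refund rounding in checkout flow"))
print(label_log_entry("Update README"))
```

Keyword counting is deliberately crude. It is shown here only because it matches the "simple text analysis" described above; entries labeled `unknown` are candidates for manual review or a richer model.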

Finally, the last step is risk prediction. Looking at the data from your defect and test management tools, you can determine whether the module you are working with is high-risk or low-risk. Understanding your expectations and the risks gives you what you need to start making predictions from that data.
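To make the idea concrete, here is a simple severity-weighted heuristic that stands in for a trained model. It is not an ML model, and the weights and threshold are made-up values chosen only for illustration.

```python
from typing import Iterable

# Hypothetical severity weights and cut-off; tune these against your own defect data.
SEVERITY_WEIGHTS = {"critical": 5, "major": 3, "minor": 1}
HIGH_RISK_THRESHOLD = 8


def risk_score(severities: Iterable[str]) -> int:
    """Sum the weights of a module's recent defects; unknown severities count as 0."""
    return sum(SEVERITY_WEIGHTS.get(s.lower(), 0) for s in severities)


def risk_level(severities: Iterable[str]) -> str:
    """Classify a module as 'high' or 'low' risk from its defect severities."""
    return "high" if risk_score(severities) >= HIGH_RISK_THRESHOLD else "low"


print(risk_level(["critical", "major", "minor"]))  # score 5 + 3 + 1 = 9
print(risk_level(["minor", "minor"]))              # score 1 + 1 = 2
```

A high-risk result could trigger the full regression suite for that module, while a low-risk result could run smoke tests only. A trained model would replace `risk_score` but could keep the same interface.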

Putting this together, your DevOps pipeline can now recommend which tests to run and predict the risk of your release. Another important piece is balancing your data. For example, suppose some code check-ins contained buggy code and the unit tests failed. Because the pipeline stopped when the quality checks failed, those runs carry no useful information for the ML model and are excluded.

This concept of making pipelines smarter and more efficient is not limited to the testing stages. It can be applied to other stages of your pipeline by collecting, classifying, and transforming the data and feeding it to your AI/ML model.
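The filtering described above might look like the following sketch. The run records and the `quality_gate` field are hypothetical stand-ins for whatever your pipeline tool actually exports.

```python
from typing import Dict, List


def training_rows(runs: List[Dict]) -> List[Dict]:
    """Keep only runs that passed the quality gate and so reached the test stages."""
    return [run for run in runs if run.get("quality_gate") == "passed"]


# Hypothetical pipeline run history.
runs = [
    {"commit": "a1", "quality_gate": "passed", "regression_failures": 2},
    {"commit": "b2", "quality_gate": "failed", "regression_failures": None},
    {"commit": "c3", "quality_gate": "passed", "regression_failures": 0},
]

print([run["commit"] for run in training_rows(runs)])
```

Run `b2` never reached the regression stage, so it has no test outcome to learn from and is dropped before training.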

Getting Smarter

The demand for DevOps pipelines will continue to grow. The key to success is to develop practices that streamline and improve deployment. My advice to get started?

Begin by looking at your data pipelines and the data you are generating. Ask yourself, “Am I using my pipeline effectively? Can the data being generated be used to make my pipelines smarter?” Organizations investing in DevOps often realize numerous benefits including error reduction, faster delivery, and lowered expenses. For systems to work smarter and provide the aforementioned benefits, collecting and modeling data correctly is imperative.

Apexon helps businesses accelerate their digital product and service initiatives. Apexon’s DevOps team has experience helping companies with their most difficult digital challenges and reducing test run times by 30% and resource utilization by 50%. If you’re interested in increasing your level of automation and need assistance from initial strategy and planning through delivery and support, check out Apexon’s DevOps services or get in touch with us directly.

This blog was co-written by Amit Mistry and Deven Samant.